The AI Infrastructure Paradox: Why Overbuilding Will Create the Next Market Boom

How excess GPU capacity and consolidation will democratize AI development and unlock generational wealth creation

Amid all the flashy headlines about Big Tech, OpenAI, and other AI startups spending big on capital expenditures (capex) to build AI infrastructure, one has to ask: what is the ROI on all that capex, and where will it come from?

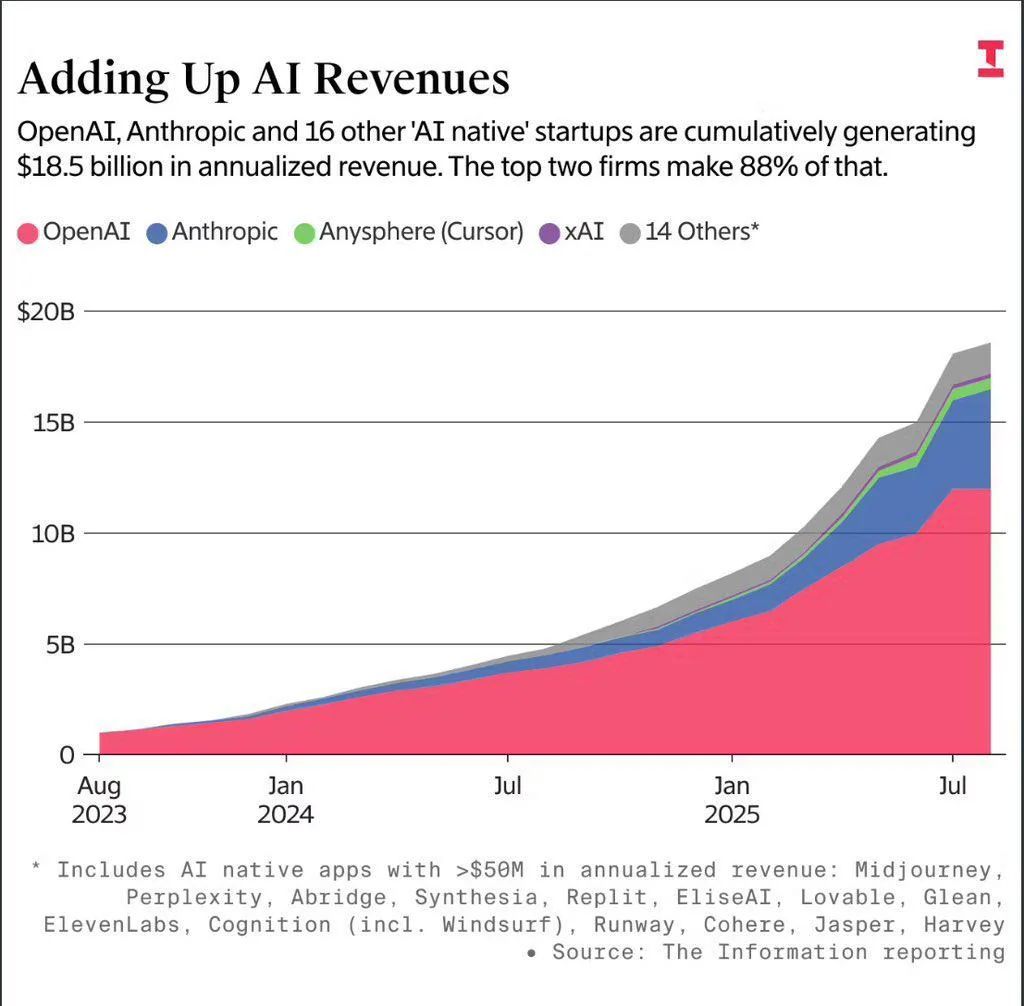

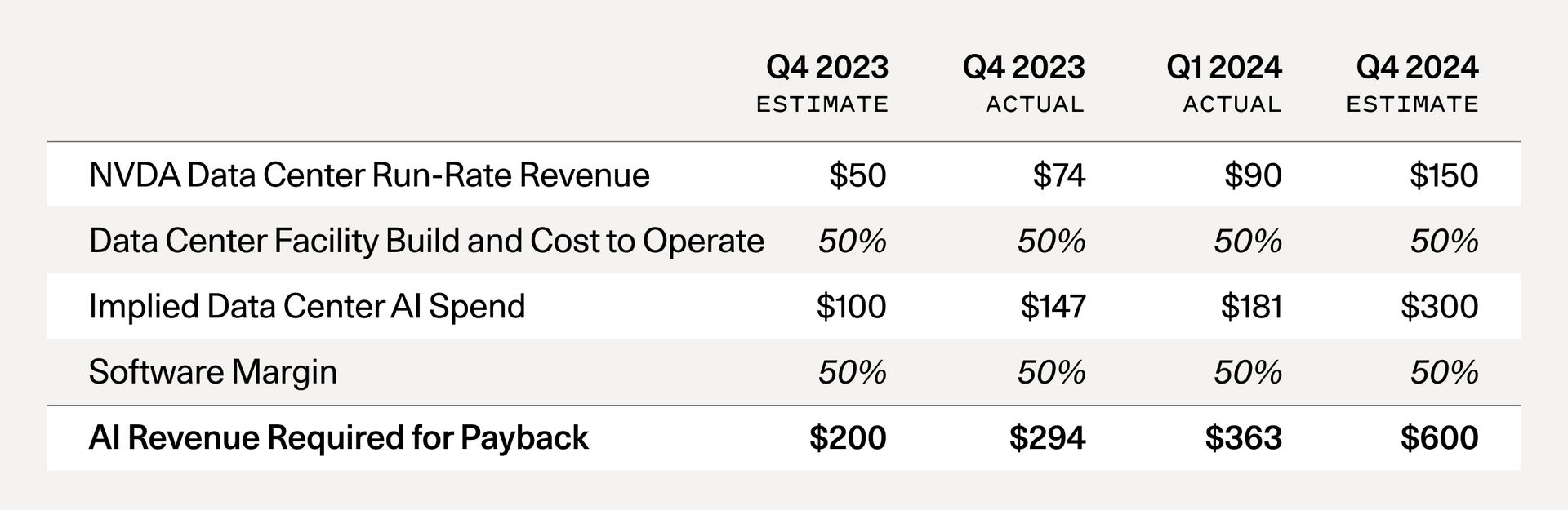

For those who remember the bad year $META had in 2022 because of its rampant metaverse spending, the massive AI spending Big Tech has been doing since the release of ChatGPT stirs up the same excitement and the same concerns. Sequoia published an article called AI’s $600B Question, which asks where all the revenue needed to justify the high capex spending will come from.

The article includes a table that calculates the AI revenue required to justify the capex spending. The calculation works like this: first, you take Nvidia's projected revenue and double it to account for the full cost of AI data centers. This doubling is necessary because GPUs represent only half the total cost of ownership; the other half covers energy, buildings, backup generators, and other infrastructure. Then you double that figure again to account for the profit margins needed by cloud providers like Azure, AWS, or Google Cloud, which must mark up their services to make money when selling AI compute to startups and businesses.
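To make the two doublings concrete, here is a minimal Python sketch of the calculation as described above. The function name and parameters are my own, and reading the second doubling as a 50% gross margin for the cloud provider is an assumption. The $150B input is an illustrative figure chosen so that the result lines up with the $600B in the article's title.

```python
def required_ai_revenue(nvidia_revenue_b: float,
                        gpu_share_of_tco: float = 0.5,
                        cloud_gross_margin: float = 0.5) -> float:
    """Return the AI revenue (in $B) needed to justify the capex.

    gpu_share_of_tco: fraction of total data center cost that is GPUs
        (0.5 means "double it", as in the description above).
    cloud_gross_margin: margin the compute seller needs
        (0.5 means "double it again"; this reading is an assumption).
    """
    # Step 1: scale GPU spend up to the full data center cost.
    data_center_cost_b = nvidia_revenue_b / gpu_share_of_tco
    # Step 2: scale cost up to the revenue needed at the target margin.
    return data_center_cost_b / (1.0 - cloud_gross_margin)


# Illustrative input only (in $B), not a figure quoted in this post.
example_nvidia_revenue_b = 150.0
print(required_ai_revenue(example_nvidia_revenue_b))  # 600.0
```

Changing either default shows how sensitive the headline number is: if GPUs were a larger share of total cost, or cloud margins were thinner, the required revenue would fall.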

From Q4’23 to today, things have changed in the AI landscape. GPUs went from being scarce to being easier to get. Big Tech and other tech players are stockpiling GPUs. OpenAI continues to have the majority of AI revenue. While demand for Nvidia’s newest chip line, Blackwell, is high, there doesn’t seem to be any news of clients struggling to source Blackwell chips.

While some will compare the AI buildout to the building of the railroads during the Industrial Revolution, there are key differences investors need to know. First, railroads have potential pricing power because there is limited space to lay track, whereas GPU data centers can be built anywhere and are treated like commoditized assets. Second, many players are building GPU data centers, which is different from the CPU cloud, an oligopoly that consists of Amazon, Google, and Microsoft.

As for depreciation, the market is under-depreciating GPU data centers. Innovation in semiconductors is rapid: the value of Nvidia's H100 chips, which were in high demand in 2023 after the release of ChatGPT, dropped rapidly once Nvidia released the B100 chip, whose capabilities are multiples higher. GPUs also have shorter lifespans than traditional assets because they run heavy workloads and because the rapid pace of AI innovation renders them obsolete faster. Big Tech has mostly extended the depreciation period for its server and network equipment over the past five years, but Amazon was an exception when it decided to reduce the useful life of its equipment from six years to five years.
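To see why the assumed useful life matters so much, here is a rough straight-line depreciation sketch in Python. The fleet cost is a hypothetical number and straight-line with zero salvage value is my simplifying assumption, not a description of any company's actual accounting policy.

```python
def annual_straight_line_depreciation(cost: float,
                                      useful_life_years: int,
                                      salvage_value: float = 0.0) -> float:
    """Annual expense when cost is spread evenly over the useful life."""
    return (cost - salvage_value) / useful_life_years


# Hypothetical GPU fleet cost in $M, for illustration only.
fleet_cost_m = 30_000.0

six_year = annual_straight_line_depreciation(fleet_cost_m, 6)
five_year = annual_straight_line_depreciation(fleet_cost_m, 5)
three_year = annual_straight_line_depreciation(fleet_cost_m, 3)

print(six_year)    # 5000.0
print(five_year)   # 6000.0
print(three_year)  # 10000.0

# Moving from a six-year to a five-year life raises the annual expense by 20%.
increase = (five_year - six_year) / six_year
print(f"{increase:.0%}")  # 20%
```

The same hardware produces very different reported earnings depending on the schedule: if the chips are economically obsolete in three years but depreciated over six, half of the real cost has not yet shown up in the income statement when the chips stop earning their keep.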

There’s a big risk of overbuilding. Beyond Big Tech and AI startups, many other players are building GPU data centers, including:

- CoreWeave
- Nebius
- Applied Digital
- IREN Ltd.
- Bitfarms
- Core Scientific
- Marathon Digital
- Riot Platforms
- Lambda Labs
- and many more

In this period of excessive infrastructure building, there are going to be winners. Once the hype and need for infrastructure building cools down, the ones who’ll be hurt the most are investors. The overbuilding of GPU data centers will push the price of compute lower and make many of these projects unprofitable. For Bitcoin miners who chose to jump on the AI hype, the pain will be higher since they have fewer financial resources than Big Tech. But for startups, lower compute prices benefit them as the cost of innovation and providing services to customers will be lower.

You might be wondering why companies would be willing to invest so much in an endeavor that may lead to huge write-offs. This question applies to Big Tech and smaller firms alike. The debate about CapEx spending on AI infrastructure is about speed, not magnitude. The more you believe in AI, the more you might worry that AI model progress will outpace physical infrastructure, leaving the latter outdated. That’s what many are thinking.

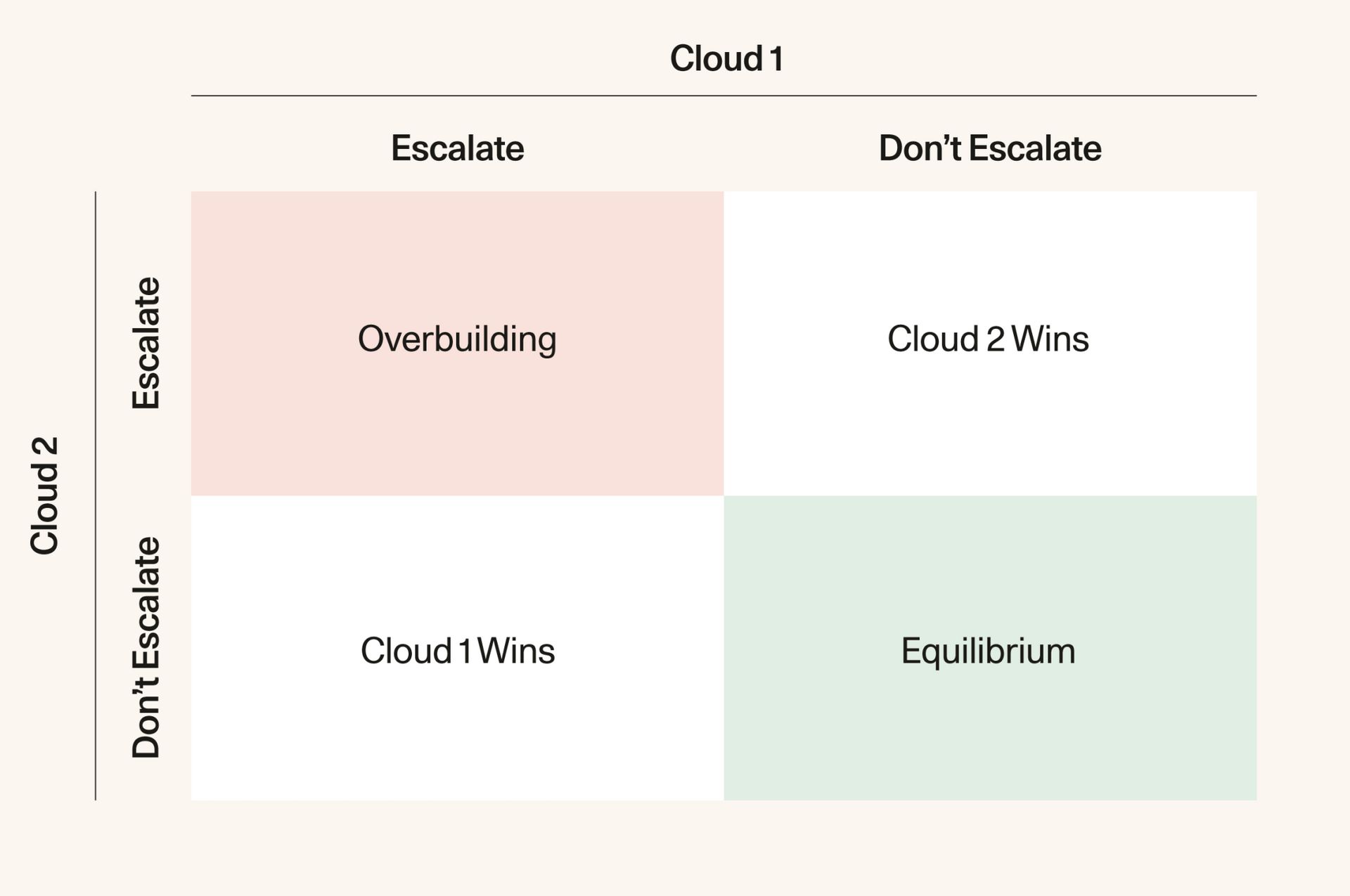

Looking at the chart above, you can understand why we are destined for overbuilding. If one company chooses to invest more in AI CapEx and the other doesn’t, the one that invests more wins. Big Tech companies are cash-rich, have diversified businesses, and always make sure they’re at the frontier of the next stage in the tech world. They don’t want to give a competitor the chance to win, and that’s why they’ll ramp up CapEx spending whenever they see a competitor doing the same.
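The logic here is a classic two-player game, and a small Python sketch can show why "invest" ends up being the dominant choice. The payoff numbers below are entirely hypothetical and are not taken from the chart; they only encode the idea that the company that invests while its rival holds back wins, and that both investing is worse for each than both holding back.

```python
INVEST, HOLD = "invest", "hold"
MOVES = (INVEST, HOLD)

# PAYOFFS[(a_move, b_move)] = (payoff to A, payoff to B).
# Hypothetical numbers chosen for illustration only.
PAYOFFS = {
    (INVEST, INVEST): (1, 1),  # both overbuild, returns get competed away
    (INVEST, HOLD):   (4, 0),  # A invests alone and wins
    (HOLD,   INVEST): (0, 4),  # B invests alone and wins
    (HOLD,   HOLD):   (3, 3),  # both hold back and keep healthy margins
}


def best_response_for_a(b_move: str) -> str:
    """Return A's payoff-maximizing move given B's move."""
    return max(MOVES, key=lambda a_move: PAYOFFS[(a_move, b_move)][0])


def dominant_strategy_for_a():
    """Return A's move if it is the best response to every B move, else None."""
    responses = {best_response_for_a(b_move) for b_move in MOVES}
    return responses.pop() if len(responses) == 1 else None


print(dominant_strategy_for_a())  # invest
```

Because the game is symmetric, the same holds for the second company. Both end up at (invest, invest), even though both would have been better off at (hold, hold), which is one way to describe how rational individual decisions add up to overbuilding.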

For smaller players, the urgency is higher as they don’t want Big Tech to own all the AI infrastructure. But with all the overbuilding that Big Tech and other cash-rich competitors are doing, smaller players stand to benefit immensely in the long run. The abundance of compute capacity coming online will push compute prices lower, and all the risks that come with AI infrastructure buildout will stay with Big Tech, not them.

Why Isn’t Apple Joining the AI CapEx Frenzy?

I wrote a thread on this topic last year. You can learn the answer here.

1/ $AAPL is playing 4D chess in the AI race while everyone else is playing checkers. Here's how they're approaching AI differently from the rest of Big Tech 🧵

— Dissecting the Markets | See pinned tweet (@dissectmarkets)

4:34 AM • Aug 2, 2024

AI Monetization

Besides the subscriptions that OpenAI, Anthropic, Google Gemini, and others are offering, many AI developers are in the early stages of monetizing their AI models in other ways.

Perplexity is running ads on its LLM. CPM (cost per thousand impressions) prices for LLM ads are around $50, far higher than the $2.50 CPM for desktop display ads and the $11.50 CPM for mobile video ads.
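For a sense of scale, here is a quick Python sketch comparing those CPM figures. The CPM values are the ones quoted above; the one million impressions is a hypothetical volume picked only to make the comparison easy to read.

```python
# CPM figures (USD per 1,000 impressions) as quoted in this post.
CPM_USD = {
    "llm_ads": 50.00,
    "desktop_display": 2.50,
    "mobile_video": 11.50,
}


def ad_revenue(impressions: int, cpm_usd: float) -> float:
    """Revenue in USD for a given number of impressions at a given CPM."""
    return impressions / 1000 * cpm_usd


# Hypothetical impression count, for illustration only.
impressions = 1_000_000

for ad_format, cpm in CPM_USD.items():
    revenue = ad_revenue(impressions, cpm)
    multiple = CPM_USD["llm_ads"] / cpm
    print(f"{ad_format}: ${revenue:,.2f} (LLM ads pay {multiple:.1f}x this format)")
```

At these rates, the same number of impressions earns 20 times more as LLM ads than as desktop display ads, and a bit over 4 times more than as mobile video ads.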

Amazon is looking to charge $5 to $10 a month to customers for an advanced version of Alexa.

For Meta, while I rarely see ads on my Meta AI queries, they have seen significant returns on AI CapEx spending for their advertising business. Meta AI is being used to improve the efficiency and personalization of its advertising platform, allowing businesses to create better ad campaigns. Advertisers on the platform have seen higher engagement results, and because of it, they pump more money into the platform. Plus, those AI tools help small businesses compete against bigger businesses, which brings even more money to the platform.

Google is already making billions on its AI products. That mainly comes in the form of consumption, where enterprise customers pay by the amount of compute they purchase from GCP. Then it also has the Gemini subscriptions, which enterprises and consumers pay for to do things like AI-generated videos and more. And finally, there’s upselling, where GCP upsells better AI models and more compute to its existing customers.

For the many Big Tech companies that sell productivity tools, AI will make those tools better and help justify price increases. One example is Microsoft 365, which got a 45% price hike on the back of all the AI tools added to the service.

Conclusion

While GPU data center overbuilding presents clear risks, I'm bullish because this excess capacity will unleash a wave of innovation as compute costs plummet. The current Big Tech arms race stems from their fear of being left behind, but the real winners will be those who leverage abundant, cheap compute to create entirely new market categories. We're heading toward a period where AI development barriers collapse, democratizing access to capabilities that only tech giants can afford today.

The monetization breakthroughs we're seeing—from Perplexity's $50 CPM ad rates to Microsoft's 45% AI-driven price increases—are just the beginning. Looking ahead, I expect entirely new business models to emerge: outcome-based pricing, AI agents participating directly in transactions, and digital labor markets we can't yet imagine.

Most importantly, we're witnessing AI's transition from cost center to primary value creation engine. The $600 billion question isn't whether revenues will materialize—it's how quickly they'll exceed projections. As AI becomes essential business infrastructure, every company must participate or risk obsolescence. This isn't just another tech cycle; it's the foundation for the next economic era, where today's positioning determines decades of disproportionate value capture.